In a major policy shift for the world’s biggest social media network, Facebook banned white nationalism and white separatism on its platform Tuesday. Facebook will also begin directing users who try to post content associated with those ideologies to a nonprofit that helps people leave hate groups, Motherboard has learned.

The new policy, which will be officially implemented next week, highlights the malleable nature of Facebook’s policies, which govern the speech of more than 2 billion users worldwide. And Facebook still has to effectively enforce the policies if it is really going to diminish hate speech on its platform.

Last year, a Motherboard investigation found that, though Facebook banned “white supremacy” on its platform, it explicitly allowed “white nationalism” and “white separatism.” After backlash from civil rights groups and historians who say there is no difference between the ideologies, Facebook has decided to ban all three, two members of Facebook’s content policy team said.

“We’ve had conversations with more than 20 members of civil society, academics, in some cases these were civil rights organizations, experts in race relations from around the world,” Brian Fishman, policy director of counterterrorism at Facebook, told us in a phone call. “We decided that the overlap between white nationalism, [white] separatism, and white supremacy is so extensive we really can’t make a meaningful distinction between them. And that’s because the language and the rhetoric that is used and the ideology that it represents overlaps to a degree that it is not a meaningful distinction.”

Specifically, Facebook will now ban content that includes explicit praise, support, or representation of white nationalism or separatism. Phrases such as “I am a proud white nationalist” and “Immigration is tearing this country apart; white separatism is the only answer” will now be banned, according to the company. Implicit and coded white nationalism and white separatism will not be banned immediately, in part because the company said it’s harder to detect and remove.

The decision was formally made at Facebook’s Content Standards Forum on Tuesday, a meeting that includes representatives from a range of different Facebook departments in which content moderation policies are discussed and ultimately adopted. Fishman told Motherboard that Facebook COO Sheryl Sandberg was involved in the formulation of the new policy, though roughly three dozen Facebook employees worked on it.

Fishman said that users who search for or try to post white nationalism, white separatism, or white supremacist content will begin getting a popup that will redirect to the website for Life After Hate, a nonprofit founded by ex-white supremacists that is dedicated to getting people to leave hate groups.

“If people are exploring this movement, we want to connect them with folks that will be able to provide support offline,” Fishman said. “This is the kind of work that we think is part of a comprehensive program to take this sort of movement on.”

Behind the scenes, Facebook will continue using some of the same tactics it uses to surface and remove content associated with ISIS, Al Qaeda, and other terrorist groups to remove white nationalist, separatist, and supremacist content. This includes content matching, which algorithmically detects and deletes images that have been previously identified to contain hate material, and will include machine learning and artificial intelligence, Fishman said, though he didn’t elaborate on how those techniques would work.

The new policy is a significant change from the company’s old policies on white separatism and white nationalism. In internal moderation training documents obtained and published by Motherboard last year, Facebook argued that white nationalism “doesn’t seem to be always associated with racism (at least not explicitly).”

That article elicited widespread criticism from civil rights, Black history, and extremism experts, who stressed that “white nationalism” and “white separatism” are often simply fronts for white supremacy.

“I do think it’s a step forward, and a direct result of pressure being placed on it [Facebook],” Rashad Robinson, president of campaign group Color Of Change, told Motherboard in a phone call.

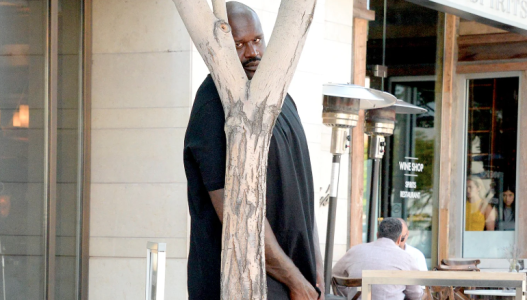

Signs at Facebook's headquarters in Menlo Park, California. Image: Jason Koebler

Experts say that white nationalism and white separatism movements are different from other separatist movements such as the Basque separatist movement in France and Spain and Black separatist movements worldwide because of the long history of white supremacism that has been used to subjugate and dehumanize people of color in the United States and around the world.

“Anyone who distinguishes white nationalists from white supremacists does not have any understanding about the history of white supremacism and white nationalism, which is historically intertwined,” Ibram X. Kendi, who won a National Book Award in 2016 for Stamped from the Beginning: The Definitive History of Racist Ideas in America, told Motherboard last year.

Heidi Beirich, head of the Southern Poverty Law Center’s (SPLC) Intelligence Project, told Motherboard last year that “white nationalism is something that people like David Duke [former leader of the Ku Klux Klan] and others came up with to sound less bad.”

While there is unanimous agreement among civil rights experts Motherboard spoke to that white nationalism and separatism are indistinguishable from white supremacy, the decision is likely to be politically controversial both in the United States, where the right has accused Facebook of having an anti-conservative bias, and worldwide, especially in countries where openly white nationalist politicians have found large followings. Facebook said that not all of the groups it spoke to believed it should change its policy.

“When you have a broad range of people you engage with, you’re going to get a range of ideas and beliefs,” Ulrick Casseus, a subject matter expert on hate groups on Facebook’s policy team, told us. “There were a few people who [...] did not agree that white nationalism and white separatism were inherently hateful.”

But Facebook said that the overwhelming majority of experts it spoke to believed that white nationalism and white separatism are tied closely to organized hate, and that all experts it spoke to believe that white nationalism expressed online has led to real-world harm. After speaking to these experts, Facebook decided that white nationalism and white separatism are “inherently hateful.”

“We saw that was becoming more of a thing, where they would try to normalize what they were doing by saying ‘I’m not racist, I’m a nationalist’, and try to make that distinction. They even go so far as to say ‘I’m not a white supremacist, I’m a white nationalist’. Time and time again they would say that but they would also have hateful speech and hateful behaviors tied to that,” Casseus said. “They’re trying to normalize it and based upon what we’ve seen and who we’ve talked to, we determined that this is hateful, and it’s tied to organized hate.”

The change comes less than two years after Facebook internally clarified its policies on white supremacy following the Charlottesville protests of August 2017, in which a white supremacist killed counter-protester Heather Heyer. That included drawing the distinction between supremacy and nationalism that extremism experts saw as problematic.

Facebook quietly made other tweaks internally around this time. One source with direct knowledge of Facebook’s deliberations said that following Motherboard’s reporting, Facebook changed its internal documents to say that racial supremacy isn’t allowed in general. Motherboard granted the source anonymity to speak candidly about internal Facebook discussions.

“Everything was rephrased so instead of saying white nationalism is allowed while white supremacy isn’t, it now says racial supremacy isn’t allowed,” the source said last year. At the time, white nationalism and Black nationalism did not violate Facebook’s policies, the source added. A Facebook spokesperson confirmed that it did make that change last year.

The new policy will not ban implicit white nationalism and white separatism, which Casseus said is difficult to detect and enforce. It also doesn’t change the company’s existing policies on separatist and nationalist movements more generally; content relating to Black separatist movements and the Basque separatist movement, for example, will still be allowed.

A social media policy is only as good as its implementation and enforcement. A recent report from NGO the Counter Extremism Project found that Facebook did not remove pages belonging to known neo-Nazi groups after this month’s Christchurch, New Zealand terrorist attacks. Facebook wants to be sure that enforcement of its policies is consistent around the world and from moderator to moderator, which is one of the reasons why its policy doesn’t ban implicit or coded expressions of white nationalism or white separatism.

David Brody, an attorney with the Lawyers’ Committee for Civil Rights Under Law, which lobbied Facebook over the policy change, told Motherboard in a phone call, “If there is a certain type of problematic content that really is not amenable to enforcement at scale, they would prefer to write their policies in a way where they can pretend it doesn’t exist.”

Keegan Hankes, a research analyst for the SPLC’s Intelligence Project, added, “One thing that continually surprises me about Facebook, is this unwillingness to recognize that even if content is not explicitly racist and violent outright, it [needs] to think about how their audience is receiving that message.”

Facebook banning white nationalism and separatism has been a long time coming, and the experts Motherboard spoke to believe that Facebook was too slow to move. Motherboard first published documents showing Facebook’s problematic distinction between supremacy and nationalism in May last year; the Lawyers’ Committee wrote a critical letter to Facebook in September. Between then and now, Facebook’s old policy has remained in place.

“It’s definitely a positive change, but you have to look at it in context, which is that this is something they should have been doing from the get-go,” Brody told Motherboard. “How much credit do you get for doing the thing you were supposed to do in the first place?”

“It’s ridiculous,” Hankes added. “The fact that it’s taken this long after Charlottesville, for instance, and then this latest tragedy to come to the position that, of course, white nationalism, white separatism are euphemisms for white supremacy.” He said that multiple groups have been lobbying Facebook around this issue, and have been frustrated with the slow response.

“The only time you seem to be able to get a serious response out of these people is when there’s a tragedy,” he added.

Motherboard raised this criticism to Fishman: If Facebook now realizes that the common academic view is that there is no meaningful distinction between white supremacy and white nationalism, why wasn’t that its view all along?

“I would say that we think we’ve got it right now,” he said.
